Put slow tasks/computation in background jobs, so web services can always respond fast. Prepare the nth server once the existing ones are already at 70% load (you would need to provision a new node anyway if your Kubernetes cluster hit that load too), either manually or with your cloud provider's autoscaler.

When you only have production and test environments, without A/B or canary deployments: again, Kubernetes would be total overkill in this case.

When you are alone or in a small team (no other teams), writing a modular monolith is fine until it becomes painful to build, test, and deploy; that is the moment you will need to split it into multiple services.
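A minimal sketch of the background-job idea, using only Python's standard library: the request handler puts the work on an in-process queue and returns immediately, while a worker thread does the slow part. `slow_computation`, `handle_request`, and the 202 response are hypothetical names chosen for illustration; a real deployment would more likely use a separate worker process with a persistent queue, so jobs survive restarts.

```python
import queue
import threading

# In-process job queue (assumption: a real system would use a persistent queue).
jobs: "queue.Queue[tuple[str, int]]" = queue.Queue()
# Hypothetical result store; in practice this could be a database table.
results: dict[str, int] = {}
results_lock = threading.Lock()


def slow_computation(n: int) -> int:
    """Hypothetical stand-in for an expensive task."""
    return sum(i * i for i in range(n))


def worker() -> None:
    """Take jobs off the queue forever and store their results."""
    while True:
        job_id, n = jobs.get()
        try:
            value = slow_computation(n)
            with results_lock:
                results[job_id] = value
        finally:
            jobs.task_done()  # lets jobs.join() know this job is finished


def handle_request(job_id: str, n: int) -> dict:
    """Web handler: enqueue the work and answer right away (202 Accepted)."""
    jobs.put((job_id, n))
    return {"status": 202, "job_id": job_id}


# One daemon worker thread; it stops when the main process exits.
threading.Thread(target=worker, daemon=True).start()

if __name__ == "__main__":
    print(handle_request("job-1", 10))  # returns before the work is done
    jobs.join()                          # demo only: wait for the queue to drain
    print(results["job-1"])              # 285
```

Because the handler only does a `put()`, its response time stays flat no matter how long the job itself takes.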

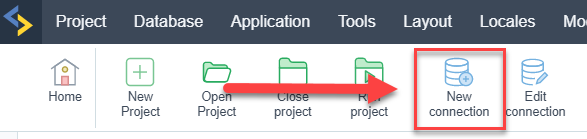

Seriously, you don't need it: a simple rsync script (run manually or in a CI/CD pipeline) or an Ansible script would be sufficient.
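As a sketch of how small such a deploy script can be, the Python below builds one `rsync` command per server and only runs them when asked. The host names, `./build/`, and `/srv/myapp/` are placeholders, and restarting or reloading the application afterwards is left out. The flags are standard rsync options: `-a` (archive mode), `-z` (compression), `--delete` (remove remote files that no longer exist locally), and `--dry-run` (preview without changing anything).

```python
import shlex
import subprocess

# Placeholder values: replace with your own servers and paths.
SERVERS = ["web1.example.com", "web2.example.com"]
LOCAL_DIR = "./build/"      # trailing slash: copy the directory's contents
REMOTE_DIR = "/srv/myapp/"


def rsync_command(host: str, dry_run: bool = True) -> list[str]:
    """Build the rsync command that mirrors LOCAL_DIR to one server."""
    cmd = ["rsync", "-az", "--delete"]
    if dry_run:
        cmd.append("--dry-run")  # show what would change without changing it
    cmd += [LOCAL_DIR, f"{host}:{REMOTE_DIR}"]
    return cmd


def deploy(dry_run: bool = True, execute: bool = False) -> list[list[str]]:
    """Sync to each server in turn; stop at the first failure."""
    commands = [rsync_command(host, dry_run) for host in SERVERS]
    for cmd in commands:
        print(shlex.join(cmd))
        if execute:
            subprocess.run(cmd, check=True)  # raises CalledProcessError on failure
    return commands


if __name__ == "__main__":
    deploy()  # prints the commands only; pass execute=True to run them
```

Calling `deploy(dry_run=False, execute=True)` from a CI/CD job does the same thing as running it by hand, which is the point: no orchestrator is involved.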

When you are using a slow programming language implementation, the first bottleneck is not the database but your API/web request handling. Why? Normally, if you code in a compiled programming language, the bottleneck is not the network or the number of hits but the database, which is usually solved with caching if the load is read-only, or with a CDN for static files.

For canary deployments, the reasoning is similar. Why? Creating a canary-like deployment manually (setting up the load balancer to send a percentage of requests to specific nodes) is a bit of a chore. Alternative? Use Nomad and the Consul service registry.

If you have 3+ powerful servers, it's better to use Kubernetes or any other orchestration service. Why? With an orchestration service we can binpack and fully utilize those servers, better than creating a bunch of VMs inside the servers. Alternative? Use Ansible to set up those servers, Nomad to orchestrate them, or a script to rsync to multiple servers.

If you have a lot of teams (>3; not 3 people, but 3 teams) and services, it's better to use Kubernetes or any other orchestration service to utilize your servers. Why? Because that way each team can deploy its services independently, and service mesh management is far easier with orchestration. Alternative? Use Nomad, or a standard rsync with an autoreloader (e.g. overseer if you are using Golang). If you are using dynamic languages (or old CGI/FastCGI) that do not create their own web server, you usually don't need an autoreloader, since the source code is on the server anyway and will be reloaded by the web server.

There are configuration settings that affect our application. For example, in Apache, LimitRequestBody limits the total size in bytes of the request body. If you are running a FastCGI web application with the mod_fcgid module, FcgidMaxRequestLen limits the request length in bytes. Since mod_fcgid 2.3.6, its default value is 128 KB (131072 bytes). If your application handles big file uploads, you may need to adjust this value; otherwise you will get an HTTP 500 error when you try to upload a big file.
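Apache enforces these limits before the request reaches the application. To illustrate the same idea at the application level, here is a minimal WSGI middleware sketch that rejects a request whose declared `Content-Length` exceeds 128 KB with `413 Payload Too Large`, instead of letting it fail later with a less helpful error. The `limit_body` name and the limit value are assumptions for this example; this is not how Apache or mod_fcgid implement their checks.

```python
from typing import Callable, Iterable

# Assumed limit, matching the 128 KB (131072 bytes) figure mentioned above.
MAX_BODY_BYTES = 128 * 1024


def _reject(start_response: Callable, status: str, message: bytes) -> list[bytes]:
    """Send a short plain-text error response."""
    start_response(status, [
        ("Content-Type", "text/plain"),
        ("Content-Length", str(len(message))),
    ])
    return [message]


def limit_body(app: Callable, max_bytes: int = MAX_BODY_BYTES) -> Callable:
    """Wrap a WSGI app so oversized request bodies are refused up front."""
    def wrapped(environ: dict, start_response: Callable) -> Iterable[bytes]:
        raw = environ.get("CONTENT_LENGTH") or "0"
        try:
            length = int(raw)
        except ValueError:
            return _reject(start_response, "400 Bad Request", b"Invalid Content-Length\n")
        if length > max_bytes:
            return _reject(start_response, "413 Payload Too Large", b"Request body too large\n")
        return app(environ, start_response)  # small enough: pass through
    return wrapped
```

This only checks the length the client declares. A body sent with chunked transfer encoding has no `Content-Length`, so the application would still need to count bytes while reading `wsgi.input`.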
Running a FastCGI application may be subject to SELinux's strict security policy. Read Permission issue with SELinux for more information.

In Fedora-based distributions, the firewall is active by default. Read Issue with firewall for more information.

Here, /tmp/fano-fcgi.sock is the socket file the application uses, and of course it must be writable by Nginx.
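On Linux, a process needs write permission on a Unix domain socket file in order to connect to it, which is why the socket must be writable by Nginx. Below is a small Python sketch for checking this; `check_socket` is a hypothetical helper. Because `os.access()` checks the permissions of the user running the script, it should be run as the user the Nginx workers run as (commonly `nginx` on Fedora, `www-data` on Debian/Ubuntu). SELinux can still deny the connection even when the file permissions are correct.

```python
import os
import stat
import sys

# Socket path used in the text; another path can be given as the first argument.
DEFAULT_SOCKET = "/tmp/fano-fcgi.sock"


def check_socket(path: str) -> list[str]:
    """Return problems found with a FastCGI socket file (empty list = looks fine)."""
    try:
        mode = os.stat(path).st_mode
    except FileNotFoundError:
        return [f"{path} does not exist (is the application running?)"]
    problems = []
    if not stat.S_ISSOCK(mode):
        problems.append(f"{path} is not a Unix domain socket")
    # os.access() uses the real UID/GID of *this* process, so run the script
    # as the Nginx worker user for the answer to mean anything.
    if not os.access(path, os.W_OK):
        problems.append(f"{path} is not writable by uid {os.getuid()}")
    return problems


if __name__ == "__main__":
    target = sys.argv[1] if len(sys.argv) > 1 else DEFAULT_SOCKET
    for problem in check_socket(target) or ["OK"]:
        print(problem)
```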
